Setting and updating svn:ignore

Posted on: April 21, 2010

I’ve found the command line to be the most reliable and simplest way of managing subversion repositories, but also found the svn:ignore property a little more esoteric to set up than I’d like.

svn:ignore is used to specify files and folders you don’t want to be part of the version-controlled repository for your site or application build. These often include location-specific settings files, development or debugging files, etc.

For the benefit of others, here’s my rundown of getting this working for my user account on a CentOS server.

1. Set up your default text editor for SVN

First, make sure you have a default text editor set up for Subversion. The first time svn runs, it builds a config directory, which on a Linux server is a directory named ‘.subversion’ in your home directory.

Navigate into the .subversion directory (/home/youruseraccount/.subversion) and edit the config file. In the [helpers] section, uncomment the editor-cmd line and set it to the name of the editor you’ll be using (on CentOS, I use nano).

Save your changes and then navigate to your working copy (the directory where you checked out the repository).
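If you’d rather script this step, here’s a minimal sketch that switches the commented-out editor-cmd line on with sed. It assumes the stock config layout, where the line reads “# editor-cmd = …” under [helpers]; set_svn_editor is a hypothetical helper name. (Subversion also honours the SVN_EDITOR environment variable, which is a handy alternative if you can’t touch the config file.)

```shell
# Hypothetical helper: enable editor-cmd in Subversion's per-user config.
# Assumes the stock file, where the line is commented out as
# "# editor-cmd = editor (vi, emacs, notepad, etc.)" under [helpers].
set_svn_editor() {
    editor="$1"
    config="${2:-$HOME/.subversion/config}"

    if [ ! -f "$config" ]; then
        echo "No config at $config; run any svn command once to create it." >&2
        return 1
    fi

    # Replace the (possibly commented) editor-cmd line, keeping a .bak copy.
    # Comment lines such as "### Set editor-cmd to ..." are left alone.
    sed -i.bak "s|^#* *editor-cmd *=.*|editor-cmd = $editor|" "$config"
}

# Usage:
#   set_svn_editor nano
```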

2. Check which files and folders currently aren’t under version control

You can easily see what files aren’t under version control by running

svn status

and looking for the lines prefixed with a ‘?’.
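To get just the paths, you can filter that output. This sketch assumes the usual svn status layout, where the status code sits in the first column and the path follows after some padding; unversioned is a hypothetical helper name.

```shell
# Hypothetical helper: read `svn status` output on stdin and print only
# the paths Subversion doesn't know about (lines starting with '?').
unversioned() {
    grep '^?' | sed 's/^?[[:space:]]*//'
}

# Usage, from inside a working copy:
#   svn status | unversioned
```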

3. Add exclusion patterns for files and folders

Add svn:ignore properties for the files and / or folders you want Subversion to ignore by running the following command:

svn propedit svn:ignore directory-name

where directory-name is the directory you want to apply the changes to.

This should open the directory’s svn:ignore property in the text editor you specified. Enter the file and folder names and / or patterns (such as *.log) you want ignored, one per line. Once you’ve saved the changes, run

svn ci

to check in the property changes.
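The same property can be set without opening an editor, which is handy in scripts. A minimal sketch, assuming svn is on your PATH: write the patterns one per line to a file, then hand it to svn propset with -F. write_ignore_file and the /tmp/ignore.txt path are hypothetical. Bear in mind that svn:ignore only applies to the directory it’s set on, not to its subdirectories.

```shell
# Hypothetical helper: write ignore patterns one per line, the same
# format `svn propedit svn:ignore` shows you in the editor.
write_ignore_file() {
    out="$1"
    shift
    printf '%s\n' "$@" > "$out"
}

# Usage (run from the working copy; needs svn on PATH):
#   write_ignore_file /tmp/ignore.txt 'settings.local.php' '*.log' 'tmp'
#   svn propset svn:ignore -F /tmp/ignore.txt .
#   svn propget svn:ignore .     # check what was stored
#   svn ci -m "Set svn:ignore"
```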

Now if you run

svn status

the files and folders you specified to ignore won’t be listed. Bear in mind that svn:ignore only affects items that aren’t already under version control. Voila!


- In: content management | drupal | PHP


Drupal’s module installation system makes it very easy to extend and customise Drupal sites and to disable and re-enable modules quickly and non-destructively. However, the way Drupal treats modules by default can get in the way during development.

When building your own modules, it’s common for database table structure to change as project needs become clearer, or even for modules to be built in a “front to back” fashion – where the admin screens and the UI interaction skeleton is built before the underlying processing and data storage code is developed (or finalised).

If this is the case, you may find yourself building an install file late in module development and then wondering why Drupal seems to dutifully ignore it.

Despite being counter-intuitive, this behaviour has some clear benefits. Previously installed modules may have attached database tables, and these tables may still hold valid application data. Drupal’s behaviour ensures that these tables aren’t destroyed when the module is disabled. The module can then be re-enabled later without any data destruction.

To get a module to run an install file, or to re-install successfully, you need to do more than just disable and re-enable the module on the module list screen (/admin/build/modules). This is because Drupal keeps a record of previously installed modules and won’t reinstall them if they are merely disabled and re-enabled.

The trick to getting your new / updated install file to run is to remove the module’s entry from the system database table. If your module has already created one or more tables, and you want the table structure to be altered or rebuilt, you may also need to remove these tables before re-enabling the module.

Once you’ve disabled your module, removed the related entry from the system table and removed any module-related tables, you can re-enable the module and your install file will be run correctly.
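Here’s a minimal sketch of those clean-up steps as a shell helper that prints the SQL, so you can eyeball it before piping it into mysql. It assumes a Drupal 5/6-style system table with name and type columns and no table prefix; drupal_reset_module_sql, mymodule, mymodule_data and drupal_db are hypothetical names. Disable the module first, and back up the database, because the DROP TABLE lines delete data.

```shell
# Hypothetical helper: print SQL that makes Drupal forget a module was
# installed, optionally dropping the module's own tables too.
# Assumes the module is already disabled and no table prefix is in use.
drupal_reset_module_sql() {
    module="$1"
    shift
    # Removing the module's row from the system table lets the next
    # enable run the install file again.
    printf "DELETE FROM system WHERE type = 'module' AND name = '%s';\n" "$module"
    # Any remaining arguments are tables to drop so they can be rebuilt.
    for table in "$@"; do
        printf 'DROP TABLE IF EXISTS %s;\n' "$table"
    done
}

# Usage: review the output first, then pipe it into mysql.
#   drupal_reset_module_sql mymodule mymodule_data
#   drupal_reset_module_sql mymodule mymodule_data | mysql -u username -p drupal_db
```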

- In: general web


In the interests of the entire internets (ie: me) I now post some stats from this site, which has been “active” (in the very loosest sense of the word) for about 18 months.

First up, top 17 posts:

So, basically people are coming here looking for answers to coding problems. Much like what I do with 90% of the blogs I visit. (Note to self: write more of these)

Now – top 11 search terms

| Search term | Count |
| --- | --- |
| ie7 css filter | 547 |
| javascript function exists | 512 |
| ie7 specific css | 476 |
| tumblr review | 399 |
| javascript check if function exists | 386 |
| mysql insert or update | 188 |
| css filter | 183 |
| javascript check function exists | 178 |
| javascript if function exists | 176 |
| print_r to variable | 150 |
| javascript object property exists | 137 |

Basically lining up with the top 5 posts. No prizes for guessing where most of my traffic comes from.

I’m surprised at how much interest my short review of Tumblr has produced.

Top 15 referrers

Some blogs, a Google Code project I administer, my Twitter account, some comments I’ve left and a few extra bits floating about.

Weirdest Referrer

http://cnn.com/2008/CRIME/04/24/polygamy.raid/index.html

Um, OK.

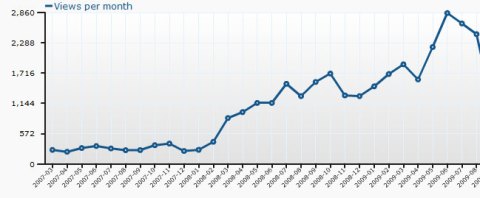

Next – traffic summary

This really is something I should use to convince some clients of the value of frequently updating content and doing something (anything!) to promote their sites – and the traffic to this site is tiny.

As you can see – for the first year I didn’t even do anything with the site and since then I’d be lucky if I average one post a month. Despite this, traffic rises – which goes to show the power of writing things that at least a few people are interested in.

Any stats you care to share? Link them up in the comments.

- In: databases | SQL


1. Produce terminal-friendly output

Occasionally, when working on the command line, you may find yourself limited by the width of the table you’re getting results from or by the size of the terminal window you’re using. (I find the DOS window particularly resistant to resizing.)

You can get results in this format

column1_name: data

column2_name: data

instead of the default tabular layout by ending your select statement with \G instead of a semicolon:

select * from my_table where id = "3"\G

2. Better, more flexible result ordering

Recently I found myself a bit hamstrung by the way a table had been designed. I wanted chronological ordering, but the default ordering by date wasn’t ordering the results exactly chronologically.

I found that I could use functions in the ‘order by’ component of my selects to get better ordering

select * from my_table order by concat(entry_date, entry_time);

(ordering by both columns in turn, order by entry_date, entry_time, should give the same result, assuming they’re DATE and TIME columns)
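Why does the concatenation work? With zero-padded values like MySQL’s DATE (YYYY-MM-DD) and TIME (HH:MM:SS) output, the joined string compares character by character in chronological order. The sketch below mimics that outside MySQL with sort, using made-up rows; chrono_key is a hypothetical name.

```shell
# Hypothetical demo: build the same key as concat(entry_date, entry_time)
# from "date time" pairs on stdin, then sort the keys as plain strings.
chrono_key() {
    awk '{ print $1 $2 }' | LC_ALL=C sort
}

# Usage, with a few made-up rows:
#   printf '2010-04-21 14:05:00\n2010-04-20 23:59:59\n2010-04-21 09:30:00\n' | chrono_key
# prints:
#   2010-04-2023:59:59
#   2010-04-2109:30:00
#   2010-04-2114:05:00
```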

3. Logging your command line session

Occasionally you may end a command line MySQL session and then think “Argh – I wish I’d saved that query!”. The mysql client has a handy logging feature which copies your session (the queries you type and their output) to a file so you can pull out those tricky queries and keep them for future reference. To use it, log in with the --tee option:

mysql -u username -p --tee=/path/to/your/log.file

Obviously you need write access to the folder you’re writing to. If the file doesn’t exist, MySQL will create it for you.

Beware: if the file already exists, MySQL appends the new session data to the end of it rather than overwriting it, so a log file you reuse will keep growing.
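If you’d rather keep each session in its own file, a small wrapper can generate a timestamped log name for --tee. A minimal sketch; mysql_logged, MYSQL_LOG_DIR and the ~/mysql-logs default are hypothetical, and any other arguments are passed straight through to mysql.

```shell
# Hypothetical wrapper: start the mysql client with --tee pointed at a
# fresh, timestamped log file. Other arguments go straight to mysql.
mysql_logged() {
    logdir="${MYSQL_LOG_DIR:-$HOME/mysql-logs}"
    mkdir -p "$logdir" || return 1
    logfile="$logdir/session-$(date +%Y%m%d-%H%M%S).log"
    echo "Logging this session to $logfile" >&2
    mysql --tee="$logfile" "$@"
}

# Usage:
#   mysql_logged -u username -p my_database
```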

4. Loading .CSV file data into a table using the command line

MySQL has a very simple way to load .csv file data into tables. Once you have the syntax down, I find this a lot easier and less error-prone than commonly used web-based or GUI tools.

Syntax I’ve used is:

load data infile '/path/to/your.csv' into table my_tables fields terminated by ',' optionally enclosed by '"' lines terminated by '\n';

Works a treat.
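If you load CSVs often, a small helper that prints the statement saves retyping it. This is a sketch built around the statement above; csv_load_sql, its optional header-skip argument and my_database are hypothetical (the skip maps to MySQL’s IGNORE n LINES clause). Plain LOAD DATA INFILE reads the file on the database server’s side; if the CSV lives on your own machine, LOAD DATA LOCAL INFILE is the variant to use, assuming local loading is enabled on both client and server.

```shell
# Hypothetical helper: print a LOAD DATA statement for a comma-separated
# file with optionally double-quoted fields and Unix line endings.
csv_load_sql() {
    csv="$1"
    table="$2"
    skip="${3:-0}"   # number of header lines to skip

    printf "LOAD DATA INFILE '%s' INTO TABLE %s\n" "$csv" "$table"
    printf '%s\n' "  FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '\"'"
    if [ "$skip" -gt 0 ]; then
        printf '%s\n' "  LINES TERMINATED BY '\n'"
        printf '  IGNORE %s LINES;\n' "$skip"
    else
        printf '%s\n' "  LINES TERMINATED BY '\n';"
    fi
}

# Usage:
#   csv_load_sql /path/to/your.csv my_tables 1 | mysql -u username -p my_database
```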

5. Cloning tables

This is really simple, but is something I use a lot to create development tables with live data (when necessary).

create table my_new_table as select * from my_old_table;

This does exactly what it looks like – creates a renamed copy of the original table’s columns and data (my_old_table in this instance).

Beware: keys and indexes aren’t recreated – you’ll need to add these back in manually.
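If you want the keys and indexes to come across too, MySQL’s CREATE TABLE … LIKE copies the table definition, including indexes, and a follow-up INSERT … SELECT copies the rows (foreign key definitions still aren’t copied). A sketch as a SQL-printing helper; clone_table_sql and my_database are hypothetical names.

```shell
# Hypothetical helper: print SQL that clones a table's structure
# (columns, keys, indexes) with CREATE TABLE ... LIKE, then its rows.
clone_table_sql() {
    old="$1"
    new="$2"
    printf 'CREATE TABLE %s LIKE %s;\n' "$new" "$old"
    printf 'INSERT INTO %s SELECT * FROM %s;\n' "$new" "$old"
}

# Usage:
#   clone_table_sql my_old_table my_new_table | mysql -u username -p my_database
```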

What about you – any handy MySQL tricks to share?

- In: content management | frameworks | PHP


It has a somewhat steep learning curve, but Drupal is amazing for building flexible, complex websites with lots of functionality in very little time.

For a site I’m working on, we had several custom content types coming through the default node template (node.tpl.php) in our customised theme.

This was fine and dandy for quick development, but then the time came to customise the node page for each content type.

First things first, you need a custom node template. Save the node.tpl.php file as node-[your node name].tpl.php and you’ve created a custom node template. (This means if your node type is ‘audio’, you’d save the file as node-audio.tpl.php)
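From a shell in your theme’s directory, that’s a single copy. A slightly more careful sketch, which refuses to overwrite an existing template; make_node_template, the sites/all/themes/mytheme path and the audio type are hypothetical.

```shell
# Hypothetical helper: copy a theme's node.tpl.php to node-TYPE.tpl.php
# without clobbering a template that already exists.
make_node_template() {
    theme_dir="$1"
    node_type="$2"
    src="$theme_dir/node.tpl.php"
    dest="$theme_dir/node-$node_type.tpl.php"

    if [ ! -f "$src" ]; then
        echo "No node.tpl.php in $theme_dir" >&2
        return 1
    fi
    if [ -e "$dest" ]; then
        echo "$dest already exists" >&2
        return 1
    fi
    cp "$src" "$dest"
}

# Usage:
#   make_node_template sites/all/themes/mytheme audio
```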

Now we need to customise the template to suit our needs.

If you dive into a standard node template you’ll see something (unhelpful) like this where the content goes:

<div class="content">
  <?php print $content; ?>
</div>

Um, OK. So how do you get at the functions which compile the content before it gets to this stage? Well, you probably can, but the easier way is to skip this step and just use the default $node object which Drupal gives you access to with each ‘page’.

To find out what’s hiding inside the $node object, simply do something like this:

<?php print_r($node); ?>

(Yes, I like print_r())

The resulting cacophony in your page source will show you all the node attributes and properties you have access to. Chances are you’ll be able to access these directly to create the customised node template you need.

- In: browsers | CSS


Firefox, Opera and WebKit-based browsers (Safari, Chrome) are all purported to be the shizzle when it comes to standards support, but that doesn’t mean they all render the same.

The following CSS chestnut will allow you to address any tweaks you need to make for WebKit-based browsers without affecting any others:

@media screen and (-webkit-min-device-pixel-ratio:0){

/* webkit rules go here */

}

- In: (x)HTML


I ran across some odd Subversion / Samba errors lately. It seemed whenever we added an image or non-text file to the repository, we were getting the error:

Commit succeeded, but other errors follow:
Error bumping revisions post-commit (details follow):
In directory '[dir name]'
Error processing command 'committed' in '[dir name]'
Can't move
'[dir name]\.svn\props\[image name].gif.svn-work' to
'[dir name]\.svn\prop-base\[image name].gif.svn-b...\
Access is denied.

It looked like a subversion issue at first – but actually it’s not, it’s a Samba permissions issue. I share this to possibly spare someone else the pain of chasing this down.

Short answer – make some small edits to smb.conf, restart samba and the problem goes away.

The full solution is here.

- In: (x)HTML


The Present

Netbooks have been a game changer in the connected world. The arrival and staggering popularity of Netbooks has turned the PC manufacturing world on its collective head.

This is not an article about Netbooks – this is an article about the age that they herald – the age of cloud computing.

Netbooks have filled a latent demand – the demand for practical, truly portable computing. They offer ‘enough’ processing power mixed with a small form factor, light weight and long battery life for maximum portability – but that’s not all. Netbooks have been the market force which has seen the adoption of cloud computing as the clear contender for the future of computing worldwide.

The Netbook revolution has highlighted one thing very clearly:

the majority of our time and activities while on a computer are spent online.

Email, socialising, image manipulation, video, image sharing and even software development are all examples of activities once tied to the desktop which are now easily and freely available via online websites and applications.

Netbook popularity centres around one key thing – the near-ubiquitous availability of internet connectivity. Internet connectivity shifts the processing and storage burden away from the client machine and into the cloud, hence the machine can cut weight and costs and increase battery life by running cheaper, slower and more efficient hardware.

The Near Future

The pent-up demand for a decent, truly mobile internet experience (phone, PDA, laptop / desktop) has been unleashed with the growth of devices such as the iPhone and other 3G devices. Netbooks have filled the void between tiny phone screen and large home / work machine by providing a comfortable in-between.

Companies such as Adobe and Amazon are banking on the demand for services in the cloud by building infrastructure and applications native to cloud computing. Microsoft even has a development cloud-OS in the works. As online activity increases and lower powered computing becomes the new norm, demand for software will decrease and demand for online services and applications will increase proportionately.

A recent Wired article hinted at the next stage of this (r)evolution by stating that a gaming company is building hardware and software to run MMORPG games on their servers and stream only the needed vectors to the client machine.

The ultimate thin-client world approaches.

The Not-So-Distant future

Let me paint a picture of where I think this is all heading.

In the cloud-future, hardware specs will become largely irrelevant for anything other than servers, as all client machines (phones, PDAs, tablets, laptops, desktops (if they still exist)) are based around four things:

- a screen

- a basic processor

- ubiquitous internet connectivity (wireless, 3G)

- an RDP client (or similar)

I didn’t include an operating system – one will still be required, but its function will be just to switch on and run the RDP client – your data, your apps and even your OS will live in the cloud. Your cloud space will adjust visually according to the device you’re using to access it.

Your ‘machine’ will be an allocated chunk of secure, virtualised server space running whatever OS you like (or more than one if you like). You run your machine/s the same way a network admin does who needs to connect to remote servers. Connectivity is everywhere and speeds are high, so lag is mostly a non-issue.

Due to its simplicity, your client hardware becomes easily replaceable – and everywhere. People own multiple variants of devices which can access your cloud computing ‘home’ and use whatever is most convenient at the time.

Client power consumption plummets as devices are far less power hungry. Server farms increase exponentially to meet the huge demand for cloud storage.

Privacy issues are potentially huge, as ‘offline’ no longer really exists and cloud-hosted personal spaces are far easier for hackers and governments to browse. Forget ISPs releasing your browsing data to the authorities, server based spaces may offer Big Brother the ability to take a long gaze at not only your documents, files and past activity, but whatever you’re doing right now.

Encryption will become a bigger issue (an issue that’s largely been forgotten in the web 2.0 world outside of the banking sector) as people move to the ubiquity and convenience of the cloud but still want the perception of security that having all their data at home gives them. Most apps (even those in eternal beta) will move to https or relevant encryption. Transparent storage-based encryption and private keys will become commonplace.

Hardware is commoditised – the data plan will be the real money spinner. This is already happening now, with some countries offering a free Netbook with a fixed data plan.

Online archiving ‘warehouses’ will spring up – offering ongoing archiving and storage of your old data to free up your working space.

You live in the cloud.

Thoughts?

There’s a great run-down on Drupal.org on how to troubleshoot the dreaded Drupal ‘White Screen of Death’ (WSOD).

This seems to happen every time I add a site to our test server – I find increasing the PHP memory limit to be the fastest way to solve it.

To increase the memory limit assigned to your Drupal install, add this to your sites/default/settings.php file (relative to your Drupal root):

ini_set('memory_limit', '32M');
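If you’re setting up several sites, adding that line can be scripted. A minimal sketch; raise_drupal_memory_limit is a hypothetical helper, and it assumes you run it from the Drupal root and that settings.php doesn’t contain a closing ?> tag (it bails out if it finds one, since a line appended after that tag would be printed rather than run). settings.php is commonly read-only after installation, so you may need to make it writable first.

```shell
# Hypothetical helper: append an ini_set('memory_limit', ...) call to a
# Drupal settings.php unless one is already there.
raise_drupal_memory_limit() {
    settings="${1:-sites/default/settings.php}"
    limit="${2:-32M}"

    if [ ! -f "$settings" ]; then
        echo "No settings file at $settings" >&2
        return 1
    fi
    if grep -q "ini_set('memory_limit'" "$settings"; then
        echo "memory_limit is already set in $settings" >&2
        return 0
    fi
    # Appending after a closing ?> would print the line instead of running it.
    if grep -q '^?>' "$settings"; then
        echo "$settings contains a closing ?> tag; add the line by hand" >&2
        return 1
    fi
    printf "\nini_set('memory_limit', '%s');\n" "$limit" >> "$settings"
}

# Usage, from the Drupal root:
#   raise_drupal_memory_limit sites/default/settings.php 32M
```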
